- A Slot Machine Reinforces A Gambler On A Schedule For Today


For instance, slot machines at casinos operate on partial schedules. They provide money (positive reinforcement) after an unpredictable number of plays (behavior). Hence, slot players are likely to continuously play slots in the hopes that they will gain money the next round (Myers, 2011).

By a continuous reinforcement schedule, we mean that each correct response is reinforced. A gambler playing a slot machine is being reinforced on which schedule of reinforcement? Slot machine designers know a lot about human behavior and how it is influenced by experience (learning). They are required by law to pay out, on average, a certain percentage of the amount put in over time (say, a 90% payout), but the schedule on which a slot machine's reinforcement is delivered is very carefully planned and programmed in.

So who pays for those Volcanic eruptions? Pirate Battles? Carnival Parades? and Glittering Showrooms? Slot Machines. 60-65% of casino revenue is generated by those bell-ringing one-armed bandits that seem to multiply on casino floors like rabbits. So how does the average player gain an advantage and possibly win? Well... aside from cheating (which we really don't suggest you get involved in), the only way to gain some sort of advantage is to choose your slots with utmost care and discrimination.

Slot machines in Las Vegas are required by law to pay out 75% of the money that goes into them; the actual payout in Las Vegas is approximately 95%. Will you be the one who takes the money instead of giving it? That is up to luck, but with a little investigation one can easily learn to identify which machines are more favorable to the player than others. Slot machines are all about the payout... Red White and Blue, Double Diamond, Dick Fucking Clark, Cherry whatever. At the end of the day, what every slot player needs to do is look at the pay schedule on the machine they want to play. Very often the same machine one row over will pay 5,000 credits on a 3rd credit jackpot while you're playing on a 2,000 3rd credit machine. Plain and simple, you're cheating yourself.

Slot Spotlights

A few notable slot machines we here at VT have found more playable, or more interesting, than the other nonsense out there like Leprechaun's Gold or Tabasco Slots or whatever.

We like machines that have the best payouts on the lowest winning spins. These will keep you going longer between larger wins and not enact the ATM-In-Reverse principle seen at many of the larger joints (Venetian being the worst we've experienced).

100 or Nothing

Red, White and Blue


Wheel of Fortune

Wild Cherry

Slot Machine Payback Percentages


Below are the slot payback percentages for Nevada's fiscal year beginning July 1, 2002 and ending June 30, 2003:

| Machine type | The Strip | Downtown | Boulder Strip | N. Las Vegas |
| --- | --- | --- | --- | --- |
| 5¢ Slot Machines | 90.32% | 91.50% | 93.03% | 92.97% |
| 25¢ Slot Machines | 92.59% | 94.83% | 96.47% | 96.63% |
| $1 Slot Machines | 94.67% | 95.35% | 96.48% | 97.21% |
| $1 Megabucks Machines | 89.12% | 88.55% | 87.76% | 89.41% |
| $5 Slot Machines | 95.33% | 95.61% | 96.53% | 96.50% |
| All Slot Machines | 93.85% | 94.32% | 95.34% | 95.32% |

The Math of Casino Slot Machines

For every dollar you wager in a slot machine, you will lose, on average, 100% - Payback% of that dollar. For example, say you're at Bellagio playing the $1 Double Diamond slot, wagering two credits ($2) per spin. According to the table ($1 machines on the Strip pay back 94.67%), you will lose 5.33% of every $2 spin... just shy of 11¢. Granted, these 11 cents don't get extracted instantly... this is computed over time. So if your bankroll limit is $10, it will take you, on average, about 43 spins before your bankroll is toast (under $1) and you are out of credits.

How was this number derived:

STAKE x Payback% = NEW STAKE, then NEW STAKE x Payback% = NEXT STAKE, and so on. With the numbers above: $10.00 x 94.67% = $9.47, then $9.47 x 94.67% = $8.96, ...

Repeat calculation until the number gets below the minimum bet - if you play long enough, you're gonna go broke. THAT is a FACT. Slot machines are entertaining, relaxing, require little thought beyond pressing a button. IF you want to truly GAMBLE, you might want to look into Video Poker, and eventually Blackjack as other options.
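The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the repeated STAKE x Payback% calculation, not a model of any real machine; the function names are made up for this example, and the $1.00 floor and 94.67% payback are simply the figures used in the text.

```python
def expected_loss_per_spin(bet: float, payback_pct: float) -> float:
    """Average loss on one spin: bet x (100% - payback%)."""
    return bet * (100.0 - payback_pct) / 100.0


def repetitions_until_below(stake: float, payback_pct: float, floor: float) -> int:
    """Count how many times STAKE x payback% is applied before the
    result drops below `floor` (the repeated calculation described above)."""
    if not 0.0 < payback_pct < 100.0 or floor <= 0.0:
        raise ValueError("payback_pct must be in (0, 100) and floor must be > 0")
    count = 0
    while stake >= floor:
        stake *= payback_pct / 100.0
        count += 1
    return count


if __name__ == "__main__":
    # $2 per spin on a $1 Strip machine (94.67% payback in the table)
    print(f"average loss per spin: ${expected_loss_per_spin(2.00, 94.67):.4f}")
    print("repetitions:", repetitions_until_below(10.00, 94.67, 1.00))
```

Swapping in a different row of the table (say, a 5¢ machine on the Strip) shows how quickly a lower payback percentage shortens the ride.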

Granted, sometime in there you just might hit that $500 win on the Wheel of Fortune, or The Elvis progressive might shake rattle and roll $1000 your way... but the math inside the machine determines that you will in fact lose a certain percentage of your wager on each spin, and the more you spin... the more you will lose despite short runs of successful jackpots. If you find you are UP... leave. Every spin of a slot machine generates a random number that has NOTHING to do with previous numbers. SLOT MACHINES do NOT run in streaks (even if you might wish to think they do). Don't expect to get any of the money you put into a machine out of it unless you learn to press the CASH OUT button.
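The "no streaks" point can be checked with a toy simulation. The sketch below assumes a hypothetical machine where every spin wins with the same fixed probability (the 20% figure is invented for illustration) and compares how often a win follows a win versus how often a win follows a loss.

```python
import random


def win_rates_by_previous_outcome(win_prob: float, spins: int, seed: int = 0):
    """Simulate independent spins and return
    (win rate right after a win, win rate right after a loss)."""
    rng = random.Random(seed)
    outcomes = [rng.random() < win_prob for _ in range(spins)]
    pairs = list(zip(outcomes, outcomes[1:]))  # (previous spin, current spin)
    after_win = [cur for prev, cur in pairs if prev]
    after_loss = [cur for prev, cur in pairs if not prev]
    return sum(after_win) / len(after_win), sum(after_loss) / len(after_loss)


if __name__ == "__main__":
    hot, cold = win_rates_by_previous_outcome(win_prob=0.20, spins=200_000)
    print(f"win rate after a win:  {hot:.3f}")
    print(f"win rate after a loss: {cold:.3f}")
```

Both rates come out close to the underlying win probability, which is what independence between spins means: the previous result tells you nothing about the next one.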


Schedules of reinforcement can affect the results of operant conditioning, which is frequently used in everyday life, such as in the classroom and in parenting. Let’s examine the common types of schedules and their applications.


Schedules Of Reinforcement

Operant conditioning is the procedure of learning through association to increase or decrease voluntary behavior using reinforcement or punishment.

Schedules of reinforcement are the rules that control the timing and frequency of reinforcer delivery in order to make a target behavior more likely to recur, strengthen, or continue.

A schedule of reinforcement is a contingency schedule. The reinforcers are applied only when the target behavior has occurred; the reinforcement is therefore contingent on the desired behavior [1].

There are two main categories of schedules: intermittent and non-intermittent.

Non-intermittent schedules either reinforce every correct response or reinforce none of them, while intermittent schedules apply reinforcers after some, but not all, correct responses.

Non-intermittent Schedules of Reinforcement

Two types of non-intermittent schedules are Continuous Reinforcement Schedule and Extinction.

Continuous Reinforcement

A continuous reinforcement schedule (CRF) presents the reinforcer after every performance of the desired behavior. This schedule reinforces target behavior every single time it occurs, and is the quickest in teaching a new behavior.


Continuous Reinforcement Examples

Continuous schedules of reinforcement are often used in animal training, for example when a trainer teaches a dog new tricks. Every time the dog performs the new trick correctly, its behavior is reinforced with a treat (positive reinforcement).

A continuous schedule also works well for teaching very young children simple behaviors, such as using the potty. Toddlers are given candy whenever they use the potty, so their behavior is reinforced every time they succeed.

Partial Schedules of Reinforcement (Intermittent)

Once a new behavior is learned, trainers often turn to another type of schedule – partial or intermittent reinforcement schedule – to strengthen the new behavior.

A partial or intermittent reinforcement schedule rewards desired behaviors occasionally, but not every single time.

Behavior intermittently reinforced by a partial schedule is usually stronger. It is more resistant to extinction (more on this later). Therefore, after a new behavior is learned using a continuous schedule, an intermittent schedule is often applied to maintain or strengthen it.

Many different types of intermittent schedules are possible. The four major types of intermittent schedules commonly used are based on two different dimensions – time elapsed (interval) or the number of responses made (ratio). Each dimension can be categorized into either fixed or variable.

The four resulting intermittent reinforcement schedules are:

- Fixed interval schedule (FI)

- Fixed ratio schedule (FR)

- Variable interval schedule (VI)

- Variable ratio schedule (VR)
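The two dimensions above (ratio vs. interval, fixed vs. variable) can be sketched as two small Python classes. This is a simplified illustration, not a standard implementation: the class names are invented here, and drawing the variable requirement from a uniform range around the mean is an assumption made for the sake of the example.

```python
import random


class RatioSchedule:
    """Reinforce after a required number of responses (FR or VR)."""

    def __init__(self, mean_ratio: int, variable: bool = False, seed: int = 0):
        self.mean_ratio = mean_ratio
        self.variable = variable
        self.rng = random.Random(seed)
        self.count = 0
        self.required = self._next_requirement()

    def _next_requirement(self) -> int:
        if not self.variable:
            return self.mean_ratio
        # Assumption: uniform on 1 .. 2*mean-1, so the average equals mean_ratio.
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self) -> bool:
        """Record one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._next_requirement()
            return True
        return False


class IntervalSchedule:
    """Reinforce the first response after a required time has passed (FI or VI)."""

    def __init__(self, mean_interval: float, variable: bool = False, seed: int = 0):
        self.mean_interval = mean_interval
        self.variable = variable
        self.rng = random.Random(seed)
        self.last_reinforced = 0.0
        self.required = self._next_requirement()

    def _next_requirement(self) -> float:
        if not self.variable:
            return self.mean_interval
        # Assumption: uniform on 0 .. 2*mean, so the average equals mean_interval.
        return self.rng.uniform(0.0, 2.0 * self.mean_interval)

    def respond(self, now: float) -> bool:
        """Record a response at time `now`; return True if it is reinforced."""
        if now - self.last_reinforced >= self.required:
            self.last_reinforced = now
            self.required = self._next_requirement()
            return True
        return False


if __name__ == "__main__":
    fr5 = RatioSchedule(5)                      # FR: every 5th response
    print([fr5.respond() for _ in range(10)])
    fi10 = IntervalSchedule(10.0)               # FI: 10 time units
    print([t for t in range(1, 26) if fi10.respond(float(t))])
```

Passing `variable=True` turns the same classes into VR and VI schedules, where the subject cannot tell in advance which response will pay off.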

Fixed Interval Schedule (FI)

Interval schedules reinforce targeted behavior after a certain amount of time has passed since the previous reinforcement.

A fixed interval schedule delivers a reward for the first response made after a set amount of time has elapsed. This schedule usually trains subjects (people or animals) to time the interval: they slow their response rate right after a reinforcement, then quickly increase it toward the end of the interval.

A “scalloped” pattern of break-and-run behavior is characteristic of this type of reinforcement schedule. The subject pauses after each reinforcement is delivered, and then the behavior occurs at a faster rate as the next reinforcement approaches [2].

Fixed Interval Example

College students studying for final exams is an example of the Fixed Interval schedule.

Most universities hold final exams at fixed intervals.

Many students whose grades depend entirely on the exam performance don’t study much at the beginning of the semester, but they cram when it’s almost exam time.

Here, studying is the targeted behavior and the exam result is the reinforcement given after the final exam at the end of the semester.

Because an exam only occurs at fixed intervals, usually at the end of a semester, many students do not pay attention to studying during the semester until the exam time comes.

Variable Interval Schedule (VI)

A variable interval schedule delivers the reinforcer after a variable amount of time has passed since the previous reinforcement.

This schedule usually generates a steady rate of performance because of the uncertainty about the timing of the next reward, and it is thought to be habit-forming [3].

Variable Interval Example

Students whose grades depend on their performance on pop quizzes throughout the semester study regularly instead of cramming at the end.

Students know the teacher will give pop quizzes throughout the year, but they cannot determine when the quizzes will occur.

Without knowing the specific schedule, the student studies regularly throughout the entire time instead of postponing studying until the last minute.

Variable interval schedules are more effective than fixed interval schedules of reinforcement in teaching and reinforcing behavior that needs to be performed at a steady rate [4].

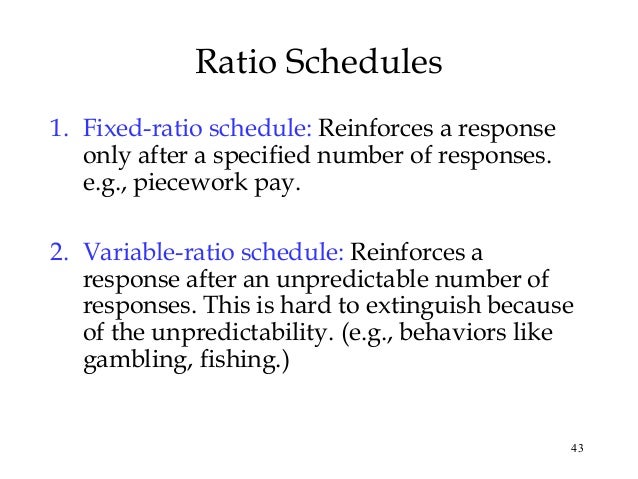

Fixed Ratio Schedule (FR)

A fixed ratio schedule delivers reinforcement after a set number of responses has been made.

Fixed ratio schedules produce high rates of response until a reward is received, which is then followed by a pause in the behavior.

Fixed Ratio Example

A toymaker produces toys, and the store buys them only in batches of five. The faster the maker produces toys, the more money he makes.

In this case, toys are paid for only when all five have been made. The toy-making is rewarded and reinforced when five are delivered.

People who follow such a fixed ratio schedule usually take a break after they are rewarded and then the cycle of fast-production begins again.

Variable Ratio Schedule (VR)

Variable ratio schedules deliver reinforcement after a variable number of responses are made.

This schedule produces high and steady response rates.

Variable Ratio Example


Gambling at a slot machine or playing lottery games is a classic example of a variable ratio reinforcement schedule [5].

Gambling rewards unpredictably. Each win requires a different number of lever pulls, so gamblers keep pulling the lever in hopes of winning. Therefore, for some people, gambling is not only habit-forming but also very addictive and hard to stop [6].
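To see why the number of lever pulls per win is unpredictable, here is a minimal sketch of a hypothetical slot machine. It assumes every pull wins with the same fixed probability (the 10% figure and the function name are invented for illustration) and records how many pulls each win took.

```python
import random


def pulls_between_wins(win_prob: float, wins_wanted: int, seed: int = 0) -> list[int]:
    """Pull the lever until `wins_wanted` wins occur; return the number of
    pulls each win required."""
    rng = random.Random(seed)
    gaps: list[int] = []
    pulls = 0
    while len(gaps) < wins_wanted:
        pulls += 1
        if rng.random() < win_prob:  # this pull is reinforced
            gaps.append(pulls)
            pulls = 0
    return gaps


if __name__ == "__main__":
    gaps = pulls_between_wins(win_prob=0.10, wins_wanted=5000)
    print("first ten wins took:", gaps[:10], "pulls")
    print("average pulls per win:", sum(gaps) / len(gaps))
```

The individual counts jump around from win to win while the long-run average settles near 1 / win_prob, which is the pattern a variable ratio schedule describes: the payoff rate is stable, but no single pull can be predicted.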


Extinction

An extinction schedule (Ext) is a special type of non-intermittent reinforcement schedule, in which the reinforcer is discontinued leading to a progressive decline in the occurrence of the previously reinforced response.

How fast complete extinction happens depends partially on the reinforcement schedules used in the initial learning process.

Among the different types of reinforcement schedules, the variable-ratio schedule (VR) is the most resistant to extinction, whereas the continuous schedule is the least [7].

Schedules of Reinforcement in Parenting

Many parents use various types of reinforcement to teach new behavior, strengthen desired behavior or reduce undesired behavior.

A continuous schedule of reinforcement is often the best in teaching a new behavior. Once the response has been learned, intermittent reinforcement can be used to strengthen the learning.

Reinforcement Schedules Example

Let’s go back to the potty-training example.

When parents first introduce the concept of potty training, they may give the toddler a candy whenever they use the potty successfully. That is a continuous schedule.

After the child has been using the potty consistently for a few days, the parents can transition to rewarding the behavior only intermittently, using a variable reinforcement schedule.

Sometimes, parents may unknowingly reinforce undesired behavior.

Because such reinforcement is unintended, it is often delivered inconsistently. The inconsistency serves as a type of variable reinforcement schedule, leading to a learned behavior that is hard to stop even after the parents have stopped applying the reinforcement.

Variable Ratio Example in Parenting

When a toddler throws a tantrum in the store, parents usually refuse to give in. But once in a while, if they’re tired or in a hurry, they may decide to buy the candy, believing they will do it just that one time.

But from the child’s perspective, such a concession is a reinforcer that encourages tantrum-throwing. Because the reinforcement (candy buying) is delivered on a variable schedule, the toddler ends up throwing fits regularly in hopes of the next give-in.

This is one reason why consistency is important in disciplining children.


References

1. Case DA, Fantino E. The delay-reduction hypothesis of conditioned reinforcement and punishment: Observing behavior. J Exp Anal Behav. Published online January 1981:93-108. doi:10.1901/jeab.1981.35-93

2. Dews PB. Studies on responding under fixed-interval schedules of reinforcement: II. The scalloped pattern of the cumulative record. J Exp Anal Behav. Published online January 1978:67-75. doi:10.1901/jeab.1978.29-67

3. DeRusso AL. Instrumental uncertainty as a determinant of behavior under interval schedules of reinforcement. Front Integr Neurosci. Published online 2010. doi:10.3389/fnint.2010.00017

4. Schoenfeld W, Cumming W, Hearst E. On the classification of reinforcement schedules. Proc Natl Acad Sci U S A. 1956;42(8):563-570. https://www.ncbi.nlm.nih.gov/pubmed/16589906

5. Dixon MR, Hayes LJ, Aban IB. Examining the roles of rule following, reinforcement, and preexperimental histories on risk-taking behavior. Psychol Rec. Published online October 2000:687-704. doi:10.1007/bf03395378

6. Redish AD, Jensen S, Johnson A, Kurth-Nelson Z. Reconciling reinforcement learning models with behavioral extinction and renewal: Implications for addiction, relapse, and problem gambling. Psychol Rev. Published online July 2007:784-805. doi:10.1037/0033-295x.114.3.784

7. Azrin NH, Lindsley OR. The reinforcement of cooperation between children. J Abnorm Soc Psychol. Published online 1956:100-102. doi:10.1037/h0042490